The Ambient Peacock Explorer

I developed the Ambient Peacock Explorer as a framework for mobile units to document their environment and report back to a central hub.

I believe that new work in this area can physically substantiate the documentation through tagging, the incorporation of physical communities or other conceptual redefinitions of the environment one seeks to capture.

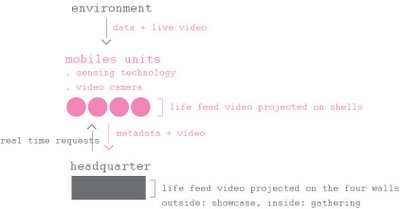

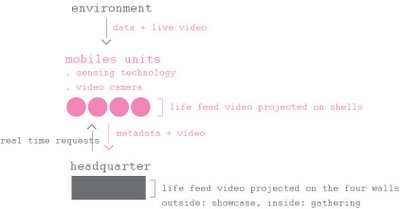

structure: mobile units + headquarters

mobile units independent of the headquarters

One shell per context of exploration. The shell inflated on top of the structure indicates where the mobile unit is going.

Context-based shells per unit

Water: Jellyfish Organic Shell

Countryside: Wooden structure

City: Inflatable Concrete

Air: Blimp

headquarters

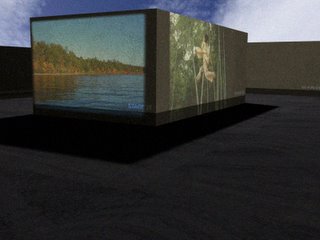

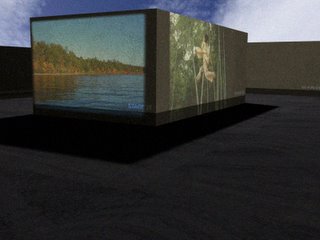

The headquarters is composed of four gathering areas (air, countryside, city and water), a studio and an editing room. Each wall receives the live feed from the mobile units according to each unit's context. Environmental data from the sensing mobile units are also projected on the walls as meta-information. The headquarters itself retro-projects the live feed of its surroundings onto its roof, and its external walls display the video from the mobile units. The production center also invites visitors to discuss the documentaries and environmental issues, which makes it a showcase building as well.

technology specs

Live video feed from mobile units to headquarters. Each mobile unit is equipped with one video camera connected via satellite to the headquarters. The live feed is sent to the headquarters and projected onto both the outside wall and the inside wall of the matching context area (the inside wall being part of the cafeteria gathering area).

Video recording and metadata from mobile units to headquarters. Each mobile unit documents its environment by recording visual elements and uses sensing technologies to combine the recorded video with environmental data for later retrieval in post-production: for instance, GPS for location data, plus temperature, wind information and so forth. The production companies will retrieve the mobile units' video recordings together with their associated metadata.
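The post leaves the metadata format open. As a rough illustration only, here is a minimal Python sketch, assuming each recording carries a time-aligned track of sensor readings (GPS position, temperature, wind) so that a production company can later pull the moments recorded near a given place. The class names, fields and the `find_moments` helper are hypothetical, and the sample values are made up.

```python
from dataclasses import dataclass, field
from math import asin, cos, radians, sin, sqrt


@dataclass
class SensorSample:
    """One environmental reading aligned with the video (hypothetical schema)."""
    t_seconds: float      # offset from the start of the recording
    lat: float            # GPS latitude in degrees
    lon: float            # GPS longitude in degrees
    temperature_c: float
    wind_speed_ms: float


@dataclass
class Recording:
    """A video clip from one mobile unit plus its metadata track (hypothetical)."""
    unit_id: str
    context: str          # "air", "countryside", "city" or "water"
    video_file: str
    samples: list[SensorSample] = field(default_factory=list)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two GPS points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def find_moments(recordings, lat, lon, radius_km, min_wind_ms=0.0):
    """Return (video_file, t_seconds) pairs recorded within radius_km of a point
    and with at least the given wind speed."""
    hits = []
    for rec in recordings:
        for s in rec.samples:
            near = haversine_km(lat, lon, s.lat, s.lon) <= radius_km
            if near and s.wind_speed_ms >= min_wind_ms:
                hits.append((rec.video_file, s.t_seconds))
    return hits


# Usage with made-up readings: the second sample lies about 2.6 km from the query point.
clip = Recording("air-1", "air", "air_0001.mp4", [
    SensorSample(0.0, 42.3601, -71.0589, 14.5, 3.0),
    SensorSample(30.0, 42.3700, -71.0300, 14.2, 8.5),
])
print(find_moments([clip], 42.3601, -71.0589, radius_km=1.0))  # [('air_0001.mp4', 0.0)]
```

Storing each reading as an offset into its clip is only one possible choice; it lets a search hit jump straight to the right point in the footage during editing.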

Live video feed from mobile units onto mobile units. Each mobile unit would retro-project its live feed footage onto its semi-transparent fabric structure to blend into its environment.

Headquarters data exchange with mobile units. Information and requests sent from the headquarters define what to explore, based on the video footage exchanged in real time. Scenario: if the air mobile unit crosses a bird migration, the headquarters could see it and request more detailed footage or more environmental sensor data about the migration.
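The request flow above is described only in prose. A minimal sketch of how such a request could travel over the satellite link, assuming JSON messages; the `HQRequest` fields, the two request kinds and the task strings are hypothetical choices for illustration, not part of the original design.

```python
import json
from dataclasses import asdict, dataclass

# Assumed vocabulary of requests the headquarters can send.
VALID_KINDS = {"detailed_footage", "more_sensor_data"}


@dataclass
class HQRequest:
    """A hypothetical message from the headquarters to one mobile unit."""
    unit_id: str
    kind: str        # one of VALID_KINDS
    subject: str     # what was spotted in the live feed, e.g. "bird migration"
    duration_s: int  # how long to keep collecting


def encode(req: HQRequest) -> str:
    """Serialize a request for transmission (JSON is an assumption)."""
    if req.kind not in VALID_KINDS:
        raise ValueError(f"unknown request kind: {req.kind}")
    return json.dumps(asdict(req))


def handle(message: str, my_unit_id: str) -> str:
    """On the mobile unit: turn an incoming request into a task for the
    person who gathers data."""
    req = HQRequest(**json.loads(message))
    if req.unit_id != my_unit_id:
        return "ignored: addressed to another unit"
    if req.kind not in VALID_KINDS:
        raise ValueError(f"unknown request kind: {req.kind}")
    if req.kind == "detailed_footage":
        return f"zoom camera on {req.subject} for {req.duration_s} s"
    return f"log extra sensor readings near {req.subject} for {req.duration_s} s"


# The bird migration scenario: the headquarters asks the air unit for closer footage.
msg = encode(HQRequest("air-1", "detailed_footage", "bird migration", 120))
print(handle(msg, "air-1"))    # zoom camera on bird migration for 120 s
print(handle(msg, "water-1"))  # ignored: addressed to another unit
```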

communication system diagram

visual scenario of the Ambient Peacock Explorer

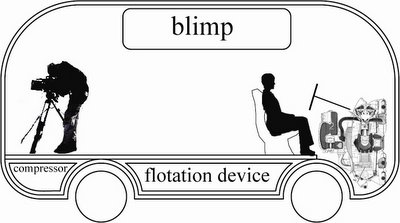

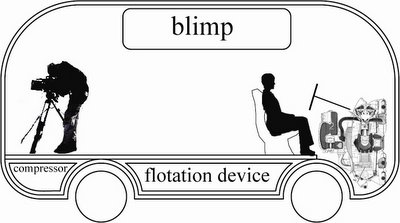

the blimp: the air mobile unit.

The shell inflated on top of the structure indicates the mobile unit is going to document from above and in the air.

The mobile unit, common to all contexts, is operated by two people. It is in real-time contact with the headquarters via satellite. One person controls the mobile unit while the other gathers data, exchanges information and prepares the unit for its environment. The unit consists of the inflatable blimp on top, the flotation device including the organic shell at the bottom, and a projector to display environmental data inside each shell.

the mobile unit going into water.

The compressor is used for the flotation device, and an organic semi-transparent shell is added around the structure. The live environmental video feed is projected onto the shell. The mobile unit is waterproof.

external view of the headquarters as a showcase.

Four walls: air, countryside, city and water. Each wall receives the live feed from the mobile units according to each unit's context.

The Ambient Peacock Explorer is a project I made with Philip Vriend for the Kinetic Architecture class, Assignment 2, November 2005.

By Cati in kinetic architecture

Posted by Cati Vaucelle @ Architectradure